User-centric and task-driven: a better way to automate

Too often, our acceptance tests end up as sequences of "click"s and "select"s running against a web application. This makes our tests hard to understand and hard to maintain. User-centric, task-driven test automation shows us a better way.

When we learn something new, it is easy to get stuck in a low-level, details-focused way of thinking. Suppose you take a course in woodworking. First you will learn how to use different types of hand and power tools. Next you might learn when to use a nail and when to use a screw, and how to use a block plane. As you gain experience, you will learn about different wood types. And with time, you will know what nails and screws to use, and whether to choose white pine or mahogany, to build your next chest.

This is all a normal part of any learning process, and applies to anything from cooking to karate. You need to master the basic moves before you can put them together to do something bigger. Only when you no longer need to occupy your mind with the fundamentals, when the moves become a part of your natural flow, can you start to reason about the bigger picture.

Because your real goal is to create something useful. You want to build a chest, a table, or a stool, not a pile of wood chippings on the floor.

Wood carving, karate, and test automation

If you are serious about learning woodwork, with practice you will come to know when to pick up the hammer and when to choose the screwdriver. You will know when to sand and when to plane. You will think “now I will add the lid to the chest”, and not “now I will attach two hinges with four screws, about 5cm from the edge of the box”.

But when we write automated acceptance tests, we frequently get stuck thinking about the low-level details. We keep our focus fixed on button clicks and input fields, the UI equivalent of nails and screws. We forget the bigger picture (testing and documenting what the user is trying to achieve), and get lost in the detail (what button should we click, or what should appear in this dropdown). We write code that looks like this:

    $("#ccapp input[name='firstname']").sendKeys("James");
    $("#ccapp input[name='lastname']").sendKeys("Smith");
    $("#ccapp input[name='dest']").selectByLabel("Norway");
    $("#ccapp input[name='triplen']").sendKeys("10");
    $("#ccapp button[type='submit']").click();

And this makes our tests much harder to understand, and much, much harder to maintain.

Page Objects to the rescue?

Many teams use the Page Object pattern to reduce duplication and make maintenance easier. Page Objects raise the level of abstraction from fields and buttons to screens and web pages. A Page Object hides the details of how to locate an element behind a friendlier-looking API. So rather than writing the code shown above, we might write something like:

    applicationForm.enterFirstName("James");
    applicationForm.enterLastName("Smith");
    applicationForm.enterDestinationCountry("Norway");
    applicationForm.enterTripLength(10);
    applicationForm.clickOnSubmit();

Page Objects are certainly an improvement on raw scripting. They help avoid duplication by placing the locators of a page in a central place, rather than in each test script. But in reality, Page Objects are just one level of abstraction above the clicks and selects in the first example. Page Objects still reason in terms of how the user achieves a task, not what task they are performing.
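To make this concrete, here is a minimal, self-contained sketch of what might sit behind such a Page Object. The `Browser` interface and `RecordingBrowser` class are hypothetical stand-ins for a real WebDriver-style API, introduced only so the example runs without a browser. The locators are the ones from the raw script shown earlier.

```java
import java.util.ArrayList;
import java.util.List;

class PageObjectSketch {

    // Hypothetical stand-in for a real browser driver, so the sketch runs on its own.
    interface Browser {
        void type(String cssLocator, String text);
        void select(String cssLocator, String label);
        void click(String cssLocator);
    }

    // Records each low-level interaction instead of driving a real browser.
    static class RecordingBrowser implements Browser {
        final List<String> log = new ArrayList<>();

        @Override public void type(String cssLocator, String text) {
            log.add("type '" + text + "' into " + cssLocator);
        }
        @Override public void select(String cssLocator, String label) {
            log.add("select '" + label + "' in " + cssLocator);
        }
        @Override public void click(String cssLocator) {
            log.add("click " + cssLocator);
        }
    }

    // The Page Object: every locator lives here, behind a friendlier method name.
    static class ApplicationForm {
        private static final String FIRST_NAME = "#ccapp input[name='firstname']";
        private static final String LAST_NAME = "#ccapp input[name='lastname']";
        private static final String DESTINATION = "#ccapp input[name='dest']";
        private static final String TRIP_LENGTH = "#ccapp input[name='triplen']";
        private static final String SUBMIT = "#ccapp button[type='submit']";

        private final Browser browser;

        ApplicationForm(Browser browser) { this.browser = browser; }

        void enterFirstName(String name) { browser.type(FIRST_NAME, name); }
        void enterLastName(String name) { browser.type(LAST_NAME, name); }
        void enterDestinationCountry(String country) { browser.select(DESTINATION, country); }
        void enterTripLength(int days) { browser.type(TRIP_LENGTH, String.valueOf(days)); }
        void clickOnSubmit() { browser.click(SUBMIT); }
    }

    public static void main(String[] args) {
        RecordingBrowser browser = new RecordingBrowser();
        ApplicationForm applicationForm = new ApplicationForm(browser);

        applicationForm.enterFirstName("James");
        applicationForm.enterLastName("Smith");
        applicationForm.enterDestinationCountry("Norway");
        applicationForm.enterTripLength(10);
        applicationForm.clickOnSubmit();

        // Each friendly call still maps one-to-one onto a field or button interaction.
        browser.log.forEach(System.out::println);
    }
}
```

The recorded log shows the limitation: each method on the Page Object corresponds to exactly one field or button, so the test still reads as a description of how the form is filled in.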

User-centric and task-driven: How, What, Why and Who

The primary role of automated acceptance tests is to illustrate how a user interacts with the system. They should give us more confidence that the application does all that we expect of it. This dual role, as a feedback tool and as living documentation, is critical: if you can't tell what a test does in functional terms, how can you have confidence when it passes? And how can you know what to do with the test if it fails?

The examples we saw earlier describe a sequence of interactions with a web page. They describe how we are testing the application in great detail, but they are still weak in describing what feature we are testing. And they don't give us any context about why the test is valuable in the first place.

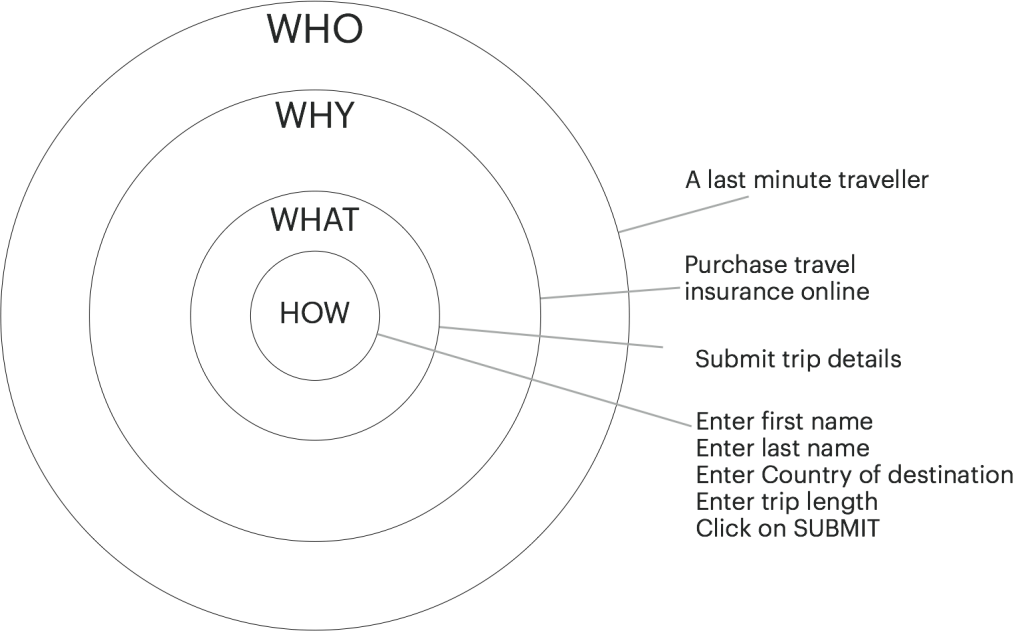

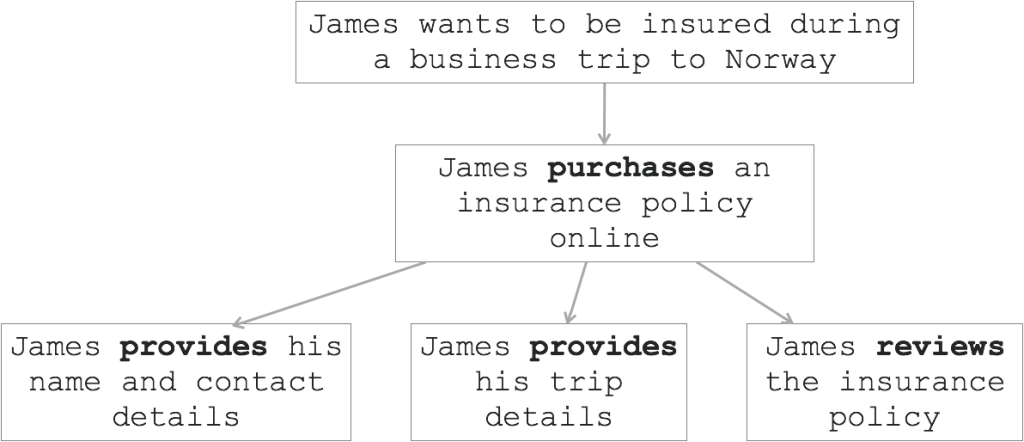

So rather than reasoning about what values we enter in the various fields, we could first reason about what task the user wants to perform (“to submit trip details”). We should understand why she is performing this task (“she is purchasing travel insurance online”), and even who she is (“a last-minute traveller”).

This approach is deeply rooted in the ideas of User-Centred Design (see "A bit of UCD for BDD & ATDD: Goals -> Tasks -> Actions" and "User-Centred Design: How a 50 year old technique became the key to scalable test automation"). We see the world not in terms of screens and objects, but in terms of actors with goals, who perform tasks to achieve these goals.

Nouns and Verbs

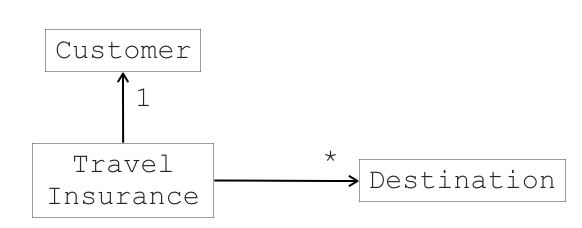

Another way of thinking about user-centric test design is in terms of what we model. When we build an application, we reason in terms of domain objects. We might reason in terms of Customers, Destinations, and Travel Insurance Policies. And when we implement the application, we might think about the screens and fields that will represent these concepts.

When we design an application, we model the application domain. The key concepts are NOUNS.

But when we test an application, we are not modelling the application itself. We are modelling how users interact with the application. Rather than modelling customers, trips and destinations, we should model how a customer provides details about their travels in order to obtain an insurance policy that covers a trip to a particular destination.

When we design a test suite, we model user goals, tasks and interactions. The key concepts are VERBS.

In other words:

| When we work with... | The application | The test suite |
|---|---|---|
| We model | The application domain | How the user interacts with the application |
| Our vocabulary is focused on | Domain objects | Goals, tasks and interactions |
| The most important concepts are | Nouns | Verbs |

User-centric task-driven test automation comes in many forms. If we described this requirement as a well-written Cucumber scenario, we might get something like the following:

    Feature: Purchase travel insurance online

      Scenario: Purchase travel insurance for a trip within Europe
        Given James has provided details for a business trip to Norway lasting 10 days
        When he reviews the proposed insurance policy
        Then the total price should be 20 euros

Note how there is no mention of screens or fields - we deal only in terms of business goals and tasks.

We could also automate this scenario directly in our test automation code, while still expressing it in terms of the user experience rather than UI interactions or web pages. The Screenplay pattern is one example of an approach that helps testers think about their tests at a higher level.

You can work with Screenplay in any language, but Serenity BDD provides ready-to-go support for Screenplay in Java and JavaScript. Here is an example in Java:

    james.attemptsTo(
        ProvideContactDetails.withFirstName("James").andLastName("Smith"),
        ProvideTripDetails.forA(Business).tripTo("Norway").lasting(10).days(),
        ReviewProposedInsurancePolicy.summary()
    );
    james.should(seeATotalPrice(), equalTo(20.00));

Notice how, in the code above, the objects (ProvideContactDetails, ProvideTripDetails, and ReviewProposedInsurancePolicy) don't represent page objects or domain objects, but rather tasks and actions that the user can perform.

As can be seen here, the resulting tests are also much easier to read.
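The idea that tasks are objects which an actor performs can be sketched in a few lines of plain Java. This is a deliberately simplified illustration, not Serenity BDD's actual API: the `Task` and `Actor` types below are hypothetical, and the tasks only write to a journal where a real suite would interact with the application.

```java
import java.util.ArrayList;
import java.util.List;

class ScreenplaySketch {

    // A task is something an actor can perform: a verb, not a noun.
    interface Task {
        void performAs(Actor actor);
    }

    // The actor performs tasks and keeps a journal of what was done (hypothetical helper).
    static class Actor {
        final String name;
        final List<String> journal = new ArrayList<>();

        Actor(String name) { this.name = name; }

        void attemptsTo(Task... tasks) {
            for (Task task : tasks) {
                task.performAs(this);
            }
        }

        void notes(String action) { journal.add(name + " " + action); }
    }

    static class ProvideContactDetails implements Task {
        private final String firstName;
        private final String lastName;

        private ProvideContactDetails(String firstName, String lastName) {
            this.firstName = firstName;
            this.lastName = lastName;
        }

        static ProvideContactDetails of(String firstName, String lastName) {
            return new ProvideContactDetails(firstName, lastName);
        }

        @Override public void performAs(Actor actor) {
            // In a real suite, the field and button interactions would live here.
            actor.notes("provides contact details for " + firstName + " " + lastName);
        }
    }

    static class ProvideTripDetails implements Task {
        private final String destination;
        private final int days;

        private ProvideTripDetails(String destination, int days) {
            this.destination = destination;
            this.days = days;
        }

        static ProvideTripDetails to(String destination, int days) {
            return new ProvideTripDetails(destination, days);
        }

        @Override public void performAs(Actor actor) {
            actor.notes("provides details for a " + days + "-day trip to " + destination);
        }
    }

    public static void main(String[] args) {
        Actor james = new Actor("James");
        james.attemptsTo(
            ProvideContactDetails.of("James", "Smith"),
            ProvideTripDetails.to("Norway", 10));
        james.journal.forEach(System.out::println);
    }
}
```

The test itself only names the actor and the tasks. How each task is carried out stays hidden inside `performAs`, which is where UI details can change without touching the test.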

Screenplay test suites are also more reusable and easier to maintain. In one recent project, we saw 70% more reuse once the team had switched over to test automation using the Screenplay pattern. You can learn more about Screenplay and its implementation in Serenity BDD in "Beyond Page Objects with Serenity Screenplay".

Conclusion

Tests that are written using a user-centric, task-driven approach are more readable and easier to understand than those relying on low-level UI interactions. They are also more reusable. While Page Objects allow you to reuse logic to interact with a web page, a user-centric, task-driven approach allows reusability not simply of fields and buttons, but of entire business tasks and flows. For larger applications, this allows much higher rates of reuse, and also much more stable components.
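As a rough illustration of reusing whole business flows, the sketch below composes three small tasks into one higher-level task that any scenario can call. All names here (`Task.composedOf`, `requestAQuote`, and the rest) are hypothetical and chosen for this example only. They are not part of Serenity BDD.

```java
import java.util.ArrayList;
import java.util.List;

class TaskReuseSketch {

    interface Task {
        void performAs(Actor actor);

        // Builds a higher-level task out of smaller ones.
        static Task composedOf(Task... steps) {
            return actor -> {
                for (Task step : steps) {
                    step.performAs(actor);
                }
            };
        }
    }

    static class Actor {
        final List<String> journal = new ArrayList<>();

        void attemptsTo(Task... tasks) {
            for (Task task : tasks) {
                task.performAs(this);
            }
        }
    }

    // Small tasks; a real suite would interact with the application here.
    static Task provideContactDetails(String name) {
        return actor -> actor.journal.add("contact details: " + name);
    }

    static Task provideTripDetails(String destination, int days) {
        return actor -> actor.journal.add("trip: " + destination + ", " + days + " days");
    }

    static Task reviewProposedPolicy() {
        return actor -> actor.journal.add("review policy");
    }

    // A whole business flow, reusable by any scenario that needs a quote.
    static Task requestAQuote(String name, String destination, int days) {
        return Task.composedOf(
            provideContactDetails(name),
            provideTripDetails(destination, days),
            reviewProposedPolicy());
    }

    public static void main(String[] args) {
        Actor james = new Actor();
        james.attemptsTo(requestAQuote("James Smith", "Norway", 10));
        james.journal.forEach(System.out::println);
    }
}
```

A second scenario with a different traveller or destination can call `requestAQuote` with other arguments, so the unit of reuse is the business flow rather than an individual field or button.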

Thanks to Jan Molak, author of Serenity/JS, for his help in reviewing this article.

RELATED EVENTS AND WORKSHOPS

John Ferguson Smart is coming to Dublin!

John Ferguson Smart, author of BDD in Action and well-known BDD thought leader, will be returning to Dublin in June for an exclusive tour of seminars, meetups and workshops.

BDD in Action: Mastering Agile Requirements

Focus on the features your customers value the most by building a shared understanding of the real business needs, and empower your teams to deliver more optimal and more reliable solutions sooner!

Advanced BDD Test Automation Workshop

Set your team on the fast track to delivering high quality software that delights the customer with these simple, easy-to-learn sprint planning techniques! Learn how to:

- Write more automated tests faster

- Write higher quality automated tests, making them faster, more reliable and easier to maintain

- Increase confidence in your automated tests, and

- Reduce the cost of maintaining your automated test suites